3 popular A/B tests we ran that didn’t do anything for us

Back in 2006, I was wandering the streets of Cusco, Peru. I had plans to hike the Inca Trail the next day and was looking for some last-minute supplies.

In the Plaza de Armas, the main square where all the tourists hang out, a little girl came running up to me.

“Mister! Mister! I made these for you.”

She pulled out three yarn finger puppets: a duck, a vulture, and an alpaca.

“Wow! For me?” I asked.

“Sí.”

She put them in my hand and closed my fingers around them. I started to put them in my pocket, when she glared at me and made the universal hand signal for money. I took a few Peruvian soles out of my pocket and gave them to her. She ran off.

My new finger puppets!

A few days after I returned from my hike, I saw the girl again. She approached another backpacker and showed him some knitted goods. He didn’t look interested and walked away.

She made a huge blunder. She had a proven sales method that had worked on me. Why change it? That mistake probably cost the little girl her dinner.

This is the dirty secret A/B test fanatics don’t talk about: When you have something that works, keep doing it!

Companies like Google, Facebook, or Amazon can afford to run small tests. With billions of customers, an extra fraction of a penny on each customer is a huge payoff. It’s worth the time and money to do it.

But if you have two customers and your life depends on converting one, you stick with what works. You can’t afford to gamble on a test. Even if it’s a so-called best practice.

Today, I want to show you 3 “best practices” we tested at IWT.

A/B test fanatics swear these hacks will bring more customers through the door. But for us, they all ended up being complete wastes of time.

Read our results and see for yourself if you want to run them or if your time is better spent on other things.

Test #1: Minimalist vs. busy homepage

If you do a Google search on web page design, this is what might come up:

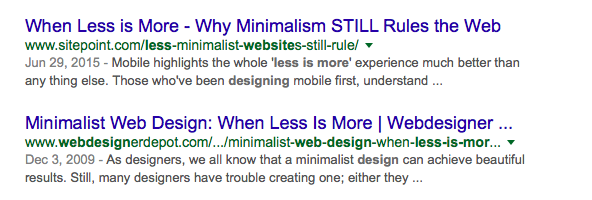

I’ll save you the time of reading all these articles. In short, they say that having more options on a page distracts people from your main call to action. And that a lot of visitors are on their smartphones, so having too much going on will kill your conversions.

We decided to put this theory to the test.

In the control corner, we had our defending heavyweight champion that has been working well for years:

Then we brought in the young-gun, minimalist challenger:

After 1 week, the minimalist version bombed. It sank conversions by 11%, and we killed the test.

The control with (gasp!) 4 calls to action on the page — plus navigation bar links — remained the undisputed winner.

Lessons learned: Removing links on the page is a standard conversion hack. But it didn’t work out for us.

The reason, we think, is that our traffic varies so much. We have content about personal finance, careers, freelancing, and more. Having the secondary calls to action at the bottom lets people select the one that interests them most.

So before you go and run this test, think long and hard: Is it right for your business? Can you afford to risk losing 11% of your customers for a week in hopes of striking the jackpot?

Test #2: Features vs. benefits

I’ve said it here on GrowthLab. You’ve probably read it in other places: You must sell benefits and not features.

For example:

- A bed doesn’t sell. But a good night’s sleep and waking up refreshed and ready for work does

- Makeup is just a combo of different chemicals. But using it makes you feel more beautiful, attractive, and confident

- A gym membership is a piece of paper. But it lets people dream about having visible abs one day

And the more specific the benefit, the better.

Or so they say. We had to find out.

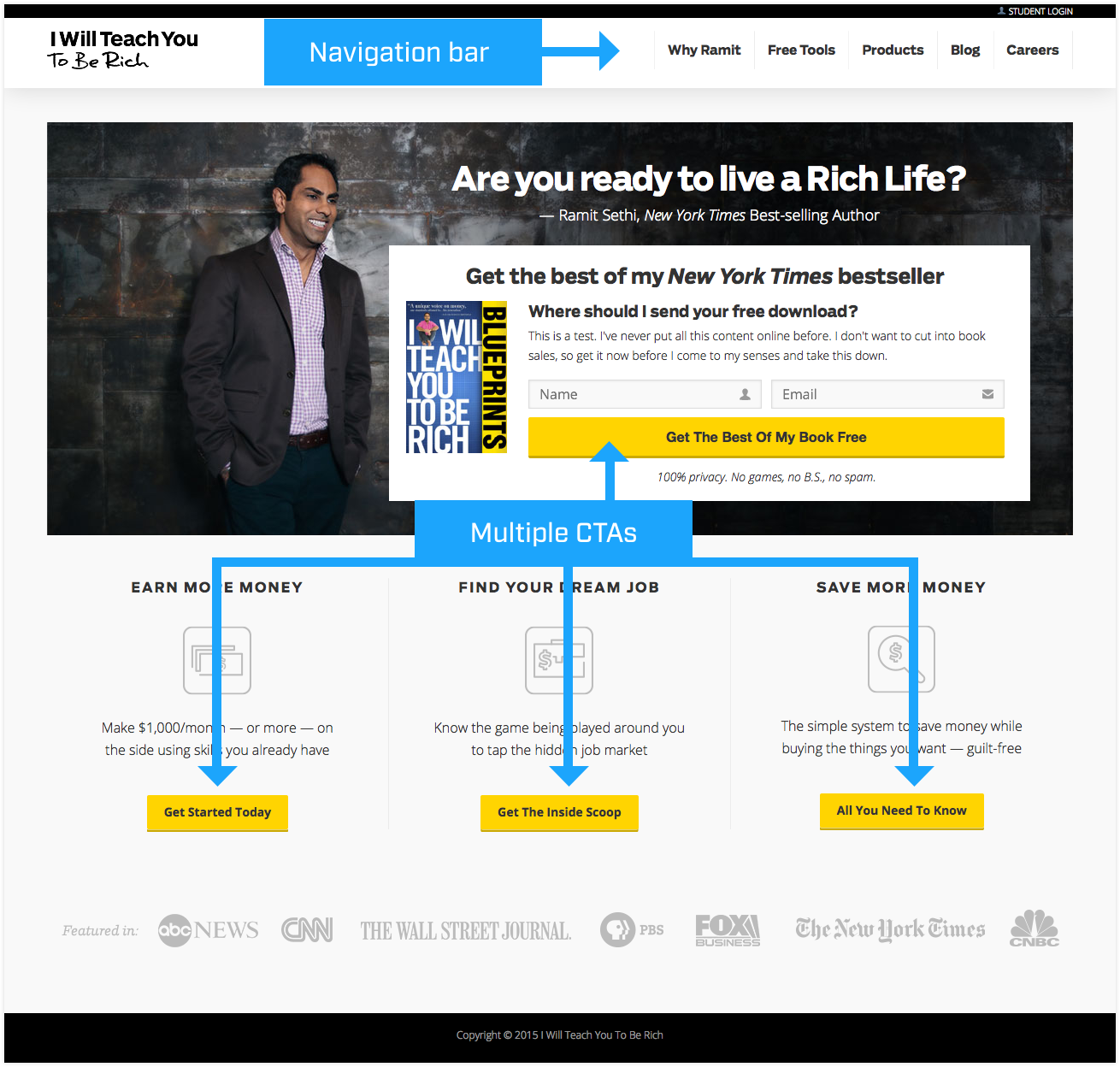

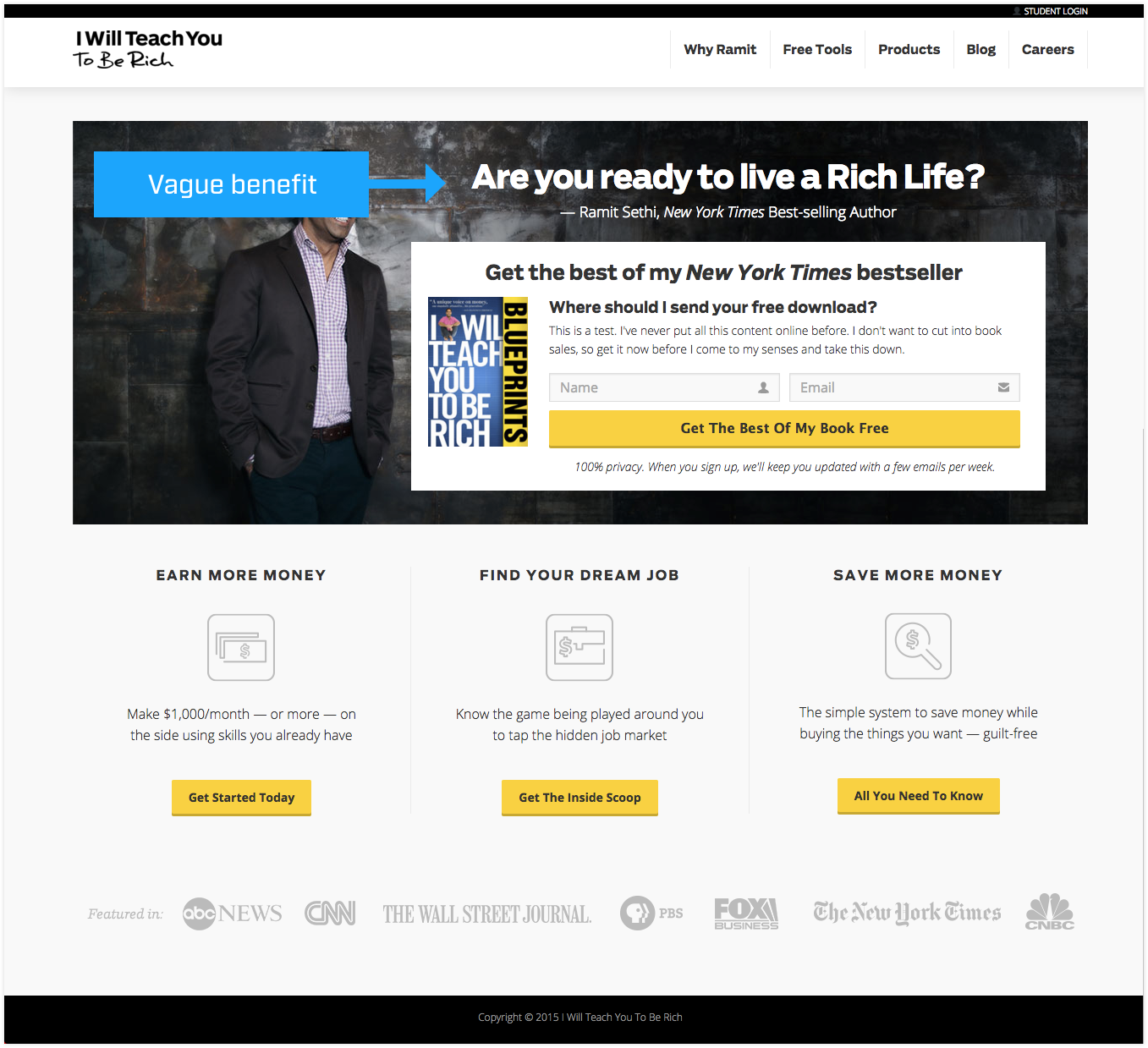

For our test, we had the vague benefit “Are You Ready to Live a Rich Life?” as our control:

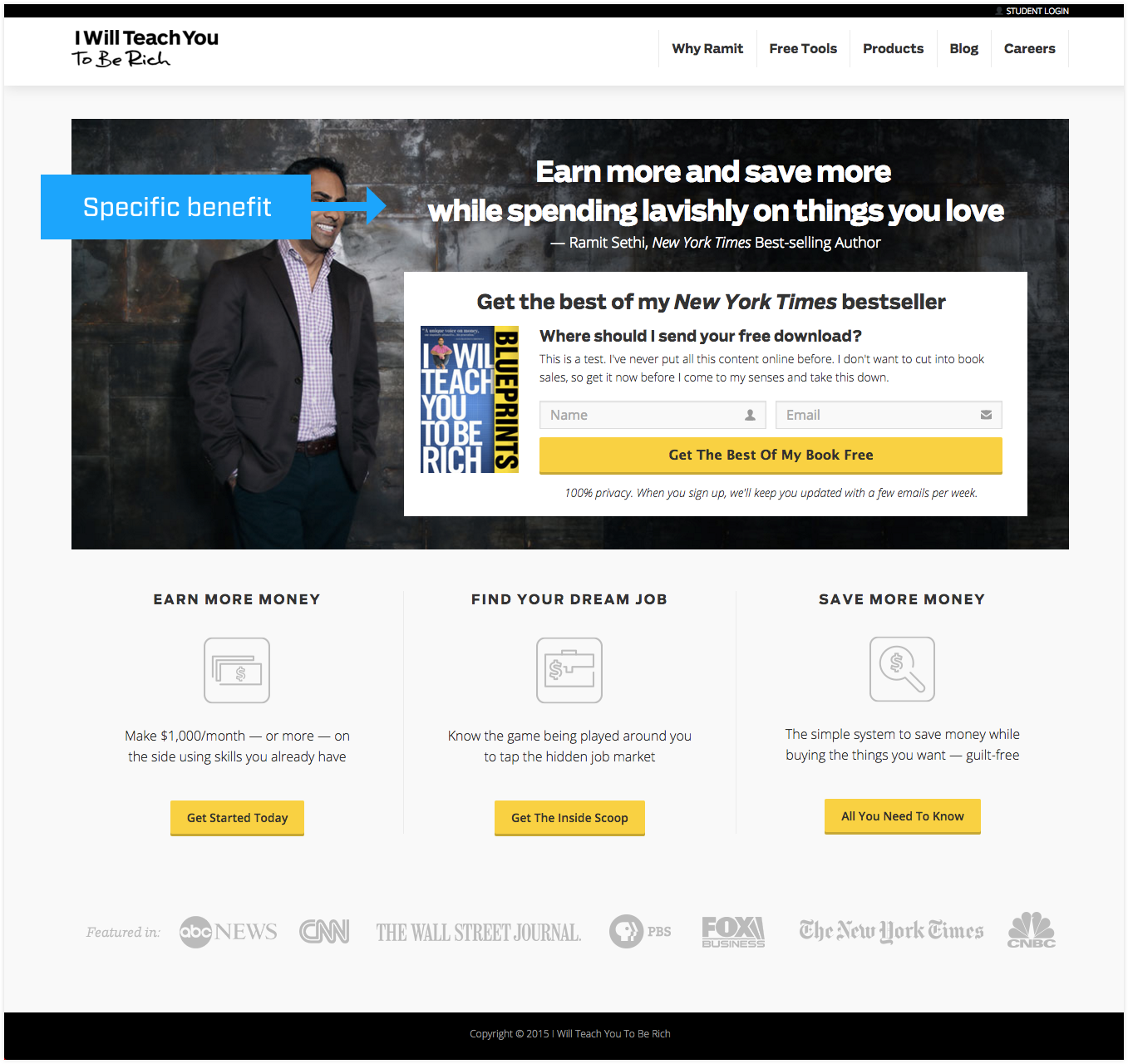

We put it up against the specific benefits of “Earn more, save more, and spend lavishly on things you love” shown here:

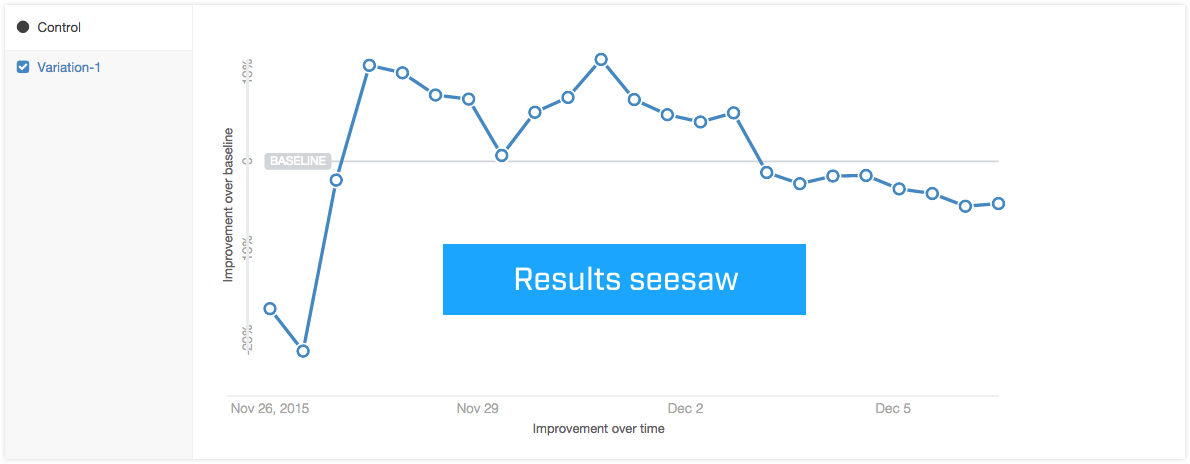

The benefits version showed gains against the control in the beginning. Then it dropped below.

After a week, we stopped the test because we believed that this seesaw pattern would continue.

This is the “boring” part of A/B testing that testing evangelists fail to mention: Most tests do nothing. Results bounce up and down from day to day. Definitive winners are few and far between.

Lessons learned: Most articles you read on how someone multiplied conversions by adding one word to a headline or misspelling something on purpose aren’t telling the entire story.

They either showed a day when everything was on a temporary upswing or they declared a winner too early.

We always use 99% significance as our threshold. Anything below that, and the results aren’t reliable.
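To make the 99% threshold concrete, here is a minimal sketch of one common way to put a number on it: a pooled two-proportion z-test, with "significance" read as 1 minus the two-sided p-value. This is an assumption about what the figure means, not a description of the tool we used, and the visitor and conversion counts are made up for illustration.

```python
import math

def lift_and_confidence(conv_a, n_a, conv_b, n_b):
    """Compare challenger B against control A.

    Returns (relative_lift, confidence), where confidence is
    1 minus the two-sided p-value of a pooled two-proportion z-test.
    Assumes both variants have visitors and the control has conversions.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # For a standard normal, 1 - two-sided p-value equals erf(|z| / sqrt(2)).
    confidence = math.erf(abs(z) / math.sqrt(2))
    return (rate_b - rate_a) / rate_a, confidence

# Hypothetical counts: 10,000 visitors per variant.
lift, confidence = lift_and_confidence(500, 10_000, 525, 10_000)
print(f"lift: {lift:.1%}, confidence: {confidence:.1%}")
print("reliable" if confidence >= 0.99 else "keep waiting or move on")
```

With these made-up counts, a 5% lift lands far below the 99% bar, which is the kind of result that bounces around from day to day.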

Test #3: A “foot in the door” vs. “the big ask”

Influence by Dr. Robert Cialdini is one of the most popular books for marketers and salespeople. You can’t find a recommended reading list that doesn’t have it.

In it, Cialdini explains how once people do a small action, they’re more likely to later commit to a bigger action.

For example, a team of researchers in California ran an experiment. They called two groups of women. They asked the first group if they could send 5-6 people over to go through their house for two hours (a big request) to do research. Only a few women said yes.

With the second group of women, they asked if they could answer a few questions (a small request) about household products they use. Three days later they called these women back, reminded them that they had already spoken, and asked if they could send 5-6 people over to go through their house for research.

The result? They were twice as likely as the first group to agree to let people come over.

In the world of sales, this means if you can get a “foot in the door,” you have a prospect that’s ready to buy.

Marketers online have used this idea to try to increase conversions. For example, instead of having you type your name and email directly into a form, they might have you click on a button that brings you to a signup page.

That first click is a small request. It makes you more likely to give your email address (big request).

We put this idea to the test on our site.

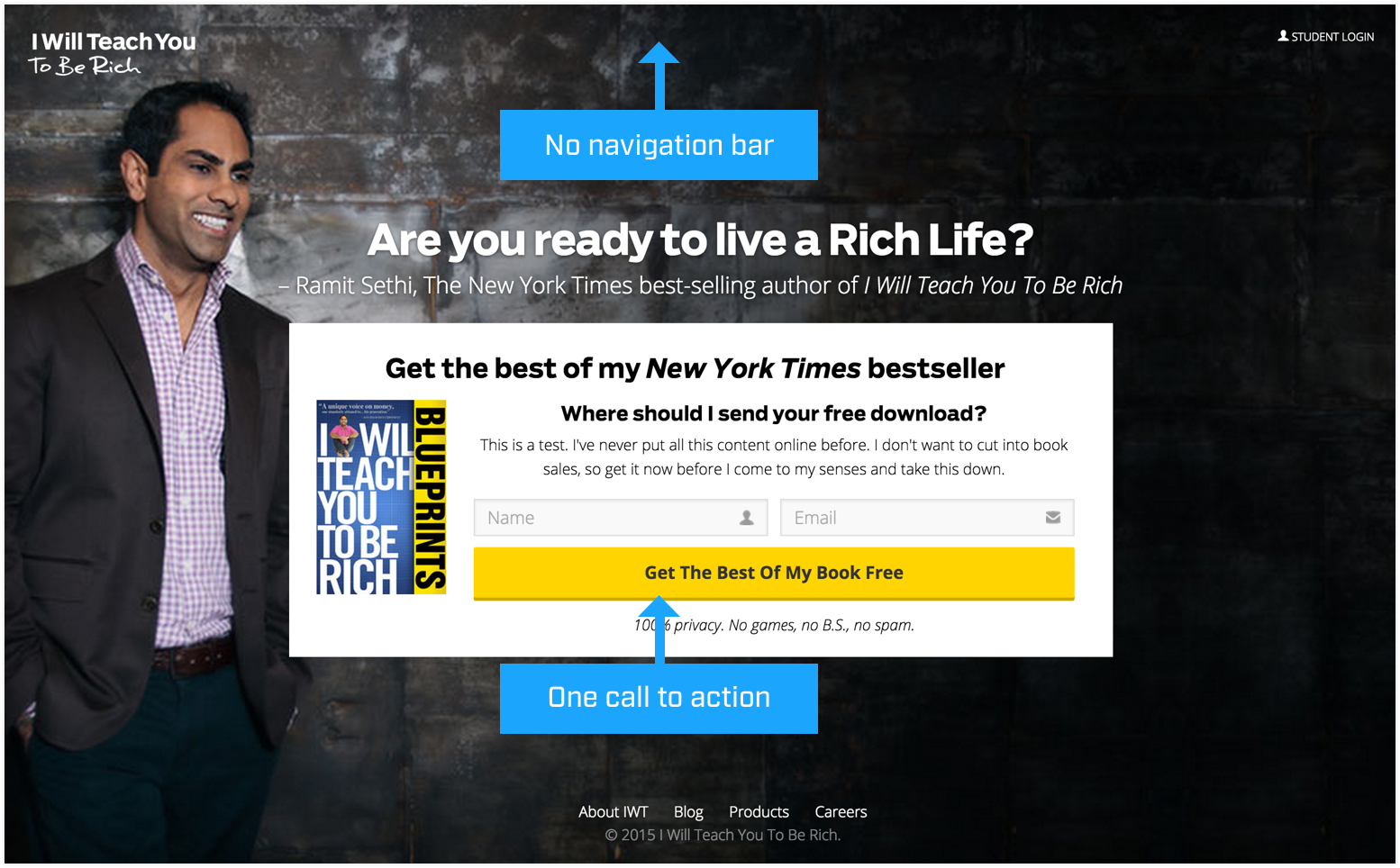

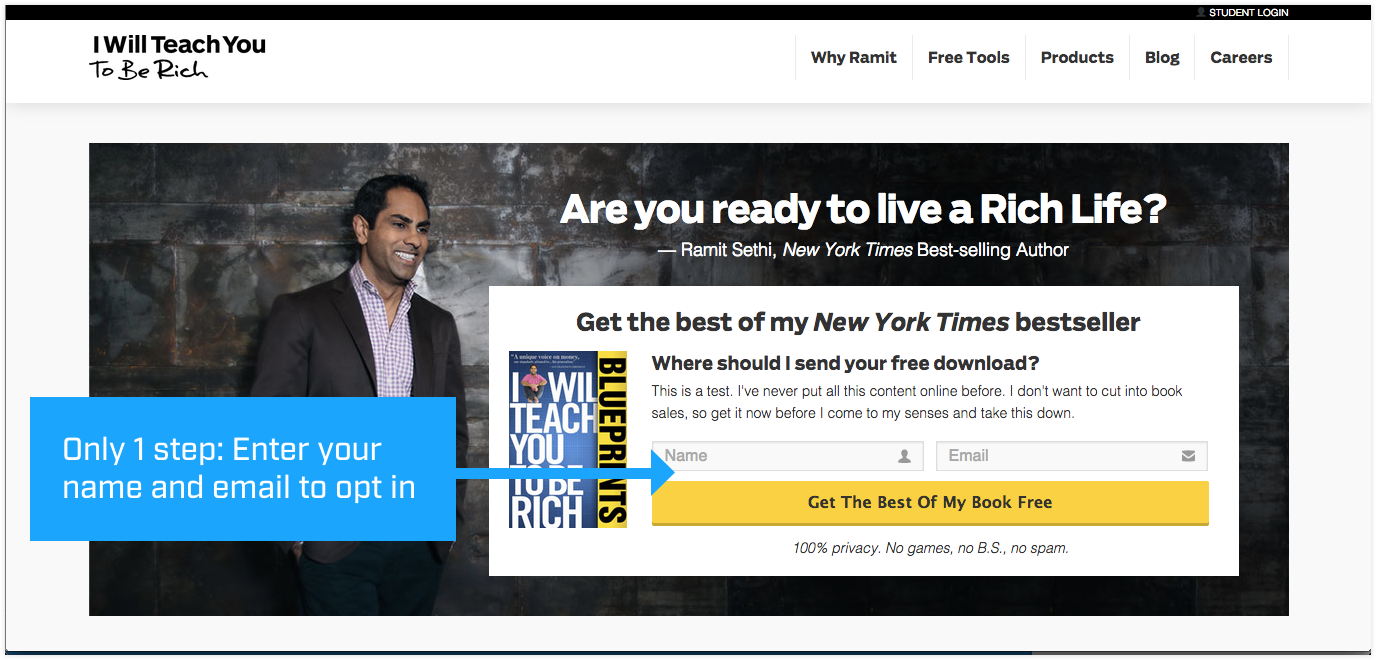

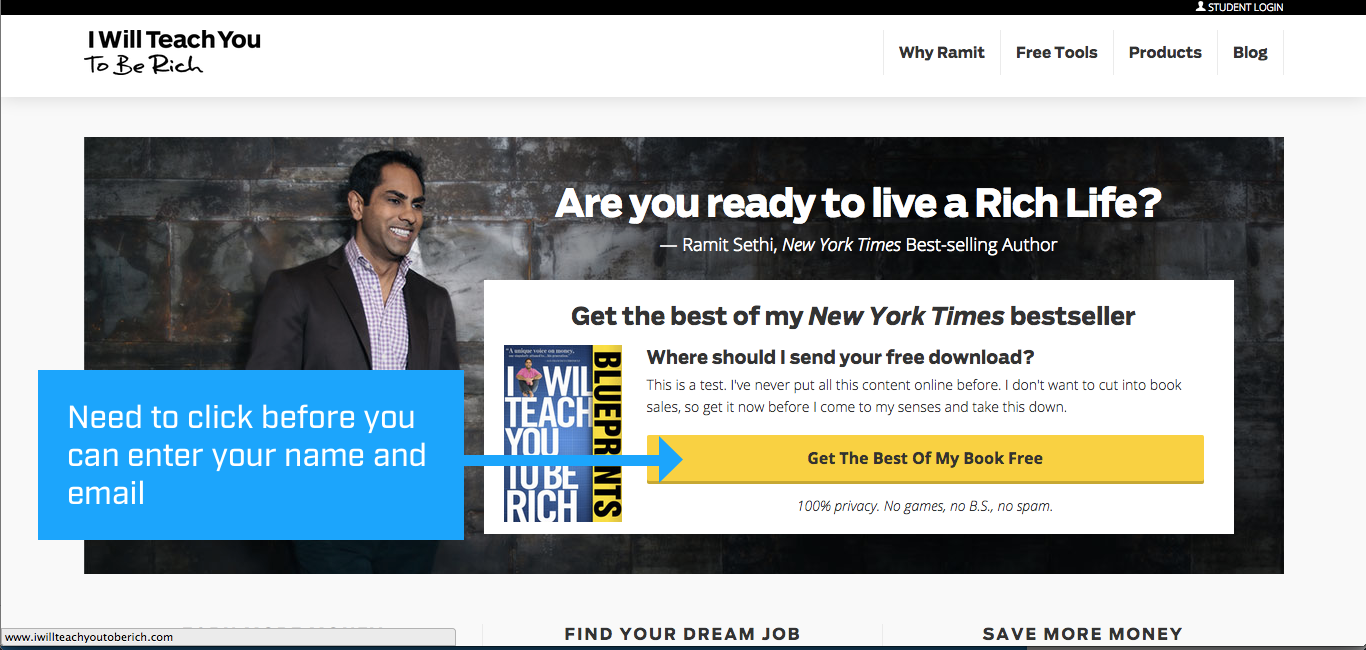

Once again, we had our undisputed heavyweight champ — the control — that asks right away for your name and email:

It went up against the two-step challenger that asked for a small click first:

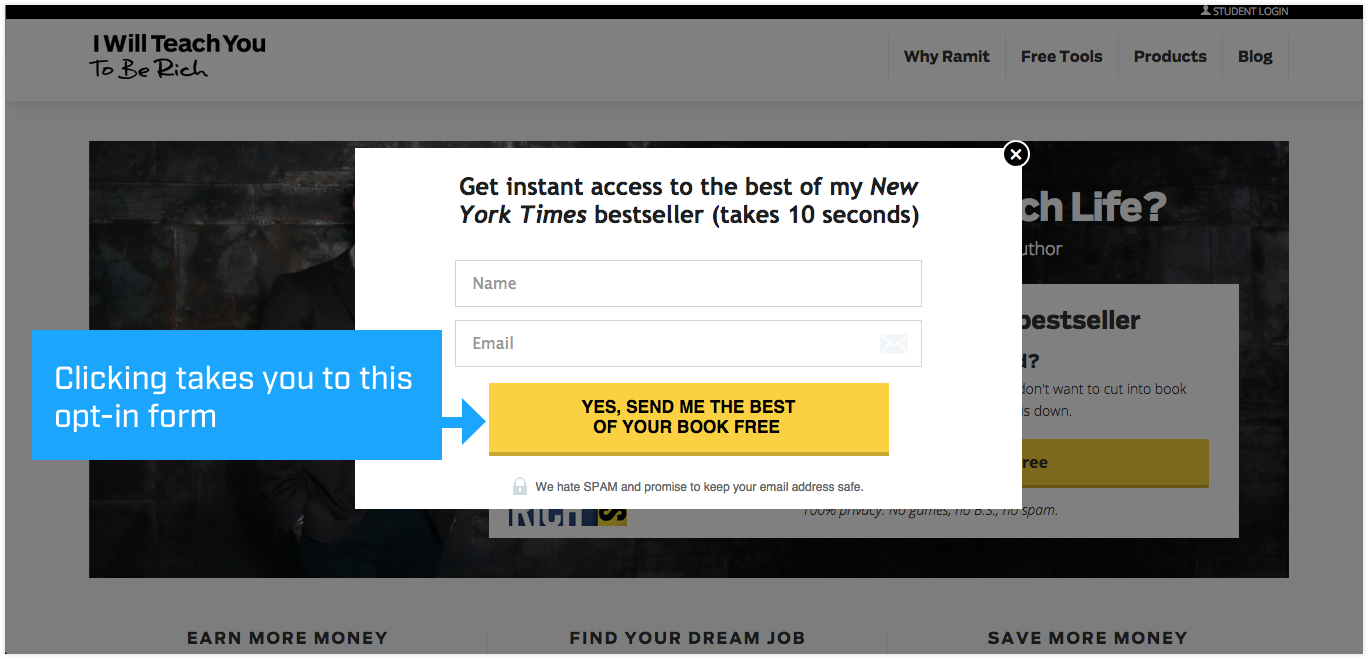

Then it came in with the big ask — your name and email:

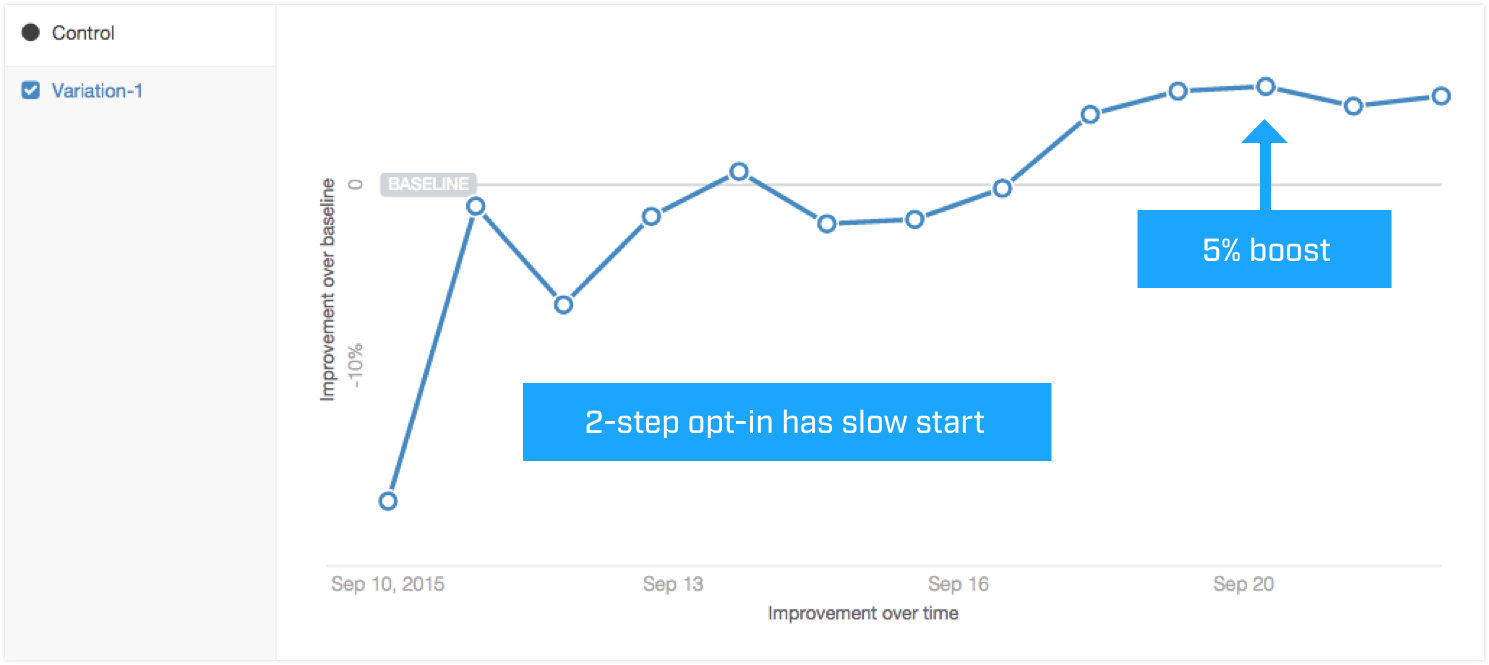

This was a battle of the ages. The two-step challenger started slow but overtook the control after a week:

By then it showed a 5% lift in conversions. We decided to kill the test, declare the control the winner, and move on.

Why? We always put the burden of proof on the challenger, which was the 2-step opt-in here. To be declared the winner, it must beat the control by more than 10% and hit 99% significance.

We don’t want to spend 6 months chasing 2-5% wins. In the same time, we could cycle through 10 different tests to find a 20% winner.
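A rough sketch of why small wins take so long: the textbook sample-size approximation for comparing two conversion rates. The 5% baseline conversion rate and the 80% power are assumptions chosen for illustration, not our real numbers, and `visitors_per_variant` is a hypothetical helper.

```python
import math
from statistics import NormalDist

def visitors_per_variant(baseline_rate, relative_lift, confidence=0.99, power=0.80):
    """Approximate visitors needed in EACH variant to detect a relative lift.

    Uses the standard normal-approximation formula for two proportions.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

# Assumed 5% baseline conversion rate (hypothetical).
small_win = visitors_per_variant(0.05, relative_lift=0.05)
big_win = visitors_per_variant(0.05, relative_lift=0.20)
print(f"5% lift:  {small_win:,} visitors per variant")
print(f"20% lift: {big_win:,} visitors per variant")
```

Under these assumptions, confirming a 5% lift needs more than ten times the traffic of confirming a 20% lift. The required sample grows roughly with the inverse square of the difference you are trying to detect.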

In this example, the two-step challenger showed a small boost at 89% significance. It would have taken months to reach 99%, if ever. So we moved on.
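Our rule is simple enough to write down as a few lines of code. This is only a sketch: the function name and parameters are hypothetical, and the thresholds are the ones stated above (more than a 10% lift, at least 99% significance).

```python
def challenger_wins(lift, confidence, min_lift=0.10, min_confidence=0.99):
    """Burden of proof is on the challenger: it must clear BOTH bars.

    lift and confidence are fractions, e.g. 0.05 for 5% and 0.89 for 89%.
    """
    return lift > min_lift and confidence >= min_confidence

# The two-step opt-in: 5% lift at 89% significance.
print(challenger_wins(lift=0.05, confidence=0.89))  # False, the control stays
```

Requiring both conditions is the point. A big lift at low significance may be noise, and a tiny lift at high significance isn’t worth the months it takes to confirm.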

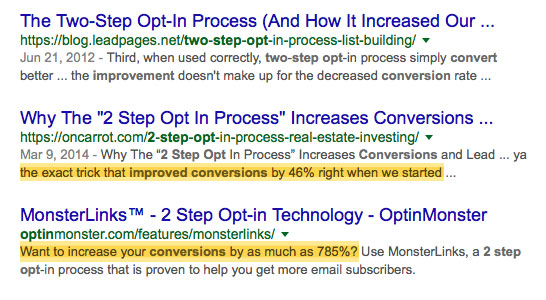

Lessons learned: Tons of case studies and articles say this tactic boosted conversions 60%. I even found one that claimed 785%.

Just Google “2-step opt-in improved conversions” and see for yourself:

Our team suspects these are just examples of extreme results in the first few days. If they waited longer, they would have come back down to earth. They might’ve even turned into losers.

We also don’t know what level of significance they used. Again, we always use 99% as our rule. Anything less, and you might be deceiving yourself.

Lastly, our team noticed that everyone boasting these awesome results sold A/B testing software. Even if they were being totally honest, they had tens of thousands of tests to sift through to cherry-pick the perfect winner for their blog post.

So next time you want to run a fancy A/B test, think twice.

It could cost you.


Written by Ramit Sethi

Host of Netflix's "How to Get Rich", NYT Bestselling Author & host of the hit I Will Teach You To Be Rich Podcast. For over 20 years, Ramit has been sharing proven strategies to help people like you take control of their money and live a Rich Life.